DataScientists: a blog about everything data-related.

-

Part 2: The Multi-Step Retriever — Implementing Agentic Query Expansion

1. Introduction: The Death of the “Simple Search”. In Part 1, we defined the blueprint for a production-grade Agentic RAG system. We moved away from passive retrieval toward a “reasoning-first” architecture. But even the best reasoning engine fails if the data fed into it is garbage. When a business user asks, “What’s our policy on…

-

Building Production-Grade Agentic RAG: A Technical Deep Dive – Part 1

Beyond Fixed Windows — Agentic & ML-Based Chunking. Introduction: The RAG Gap. The promise of Retrieval-Augmented Generation (RAG) is compelling: ground large language models in enterprise data, reduce hallucinations, and enable real-time knowledge updates. But in practice, most RAG systems fail silently. They fail not because embedding models are weak or vector databases are slow, but…

-

Modernizing Data Warehouses for AI: A 4-Step Roadmap

It’s the same conversation in every boardroom and Slack channel: “How are we using LLMs? Where are our AI agents? When do we get our Copilot?” But for the teams in the trenches, the hype is hitting a wall of legacy infrastructure. The truth is that modernizing data warehouses for AI is the invisible hurdle…

-

How Poor Data Engineering Corrodes GenAI Pipelines

Generative AI (GenAI) has captivated the world with its ability to create, synthesize, and reason. From crafting compelling marketing copy to assisting in scientific discovery, its potential seems boundless. However, the dazzling outputs often mask a critical vulnerability: the quality of the data underpinning these systems. When data engineering falters, issues of data quality, governance,…

-

Designing Production-Grade GenAI Automation

A dbt Ops Agent Case Study. A small, well-instrumented workflow can turn dbt failures into reviewable Git changes by combining deterministic parsing, constrained LLM tooling, and VCS-native delivery, while preserving governance through traces, guardrails, and CI. This is a blueprint for building a first production-grade GenAI agent. You can find the complete implementation and…

-

The Data Engineer Role in a ML Pipeline

Data engineers provide the critical foundation for every successful Machine Learning (ML) deployment, supporting the powerful models and insights that often grab headlines. While data scientists focus on model development and evaluation, data engineers ensure that the right data is collected, processed, and made available in a reliable and scalable way. 1. The Overlooked Hero…

-

AI Agent Workflows: Pydantic AI

Building Intelligent Multi-Agent Systems with Pydantic AI. In the rapidly evolving landscape of artificial intelligence, multi-agent systems have emerged as a powerful paradigm for tackling complex, domain-specific challenges. Today, I’ll walk you through a sophisticated AI agent workflow that demonstrates how multiple specialized agents can collaborate to process, analyze, and generate insights from research literature…

-

The Ultimate Vector Database Showdown: A Performance and Cost Deep Dive on AWS

In the age of AI, Retrieval-Augmented Generation (RAG) is king. The engine powering this revolution? The vector database. Choosing the right one is critical for building responsive, accurate, and cost-effective AI applications. But with a growing number of options, which one truly delivers? To answer this, we put five popular AWS-hosted vector database solutions to…

-

Data Engineer – The Top 10 Books to read in 2023

Whether you are just starting out as a data engineer or you are an old pro, it is always important to stay up to date on trends and technologies. In this post, I will cover the top 10 books every data engineer should read in 2023 to keep their skills fresh. Data Science from…

-

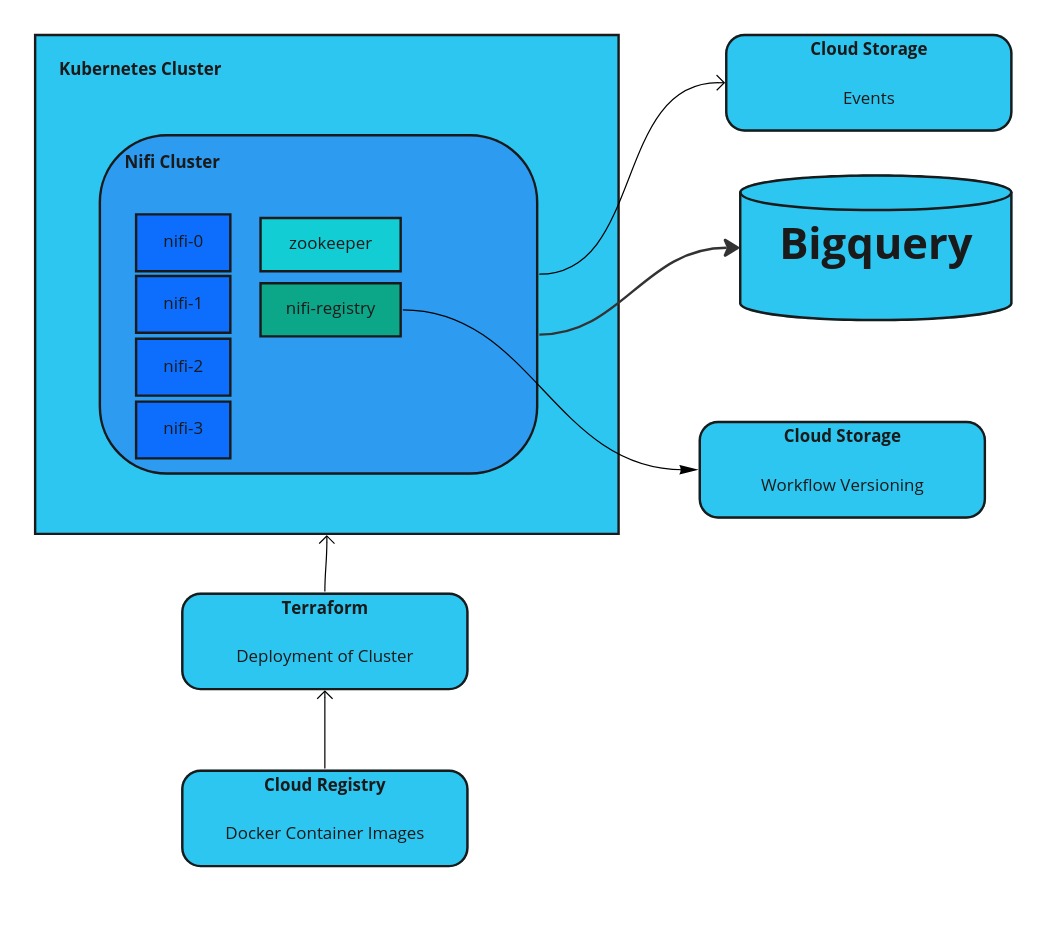

Apache NiFi on Google Kubernetes Engine (GKE)

Apache NiFi on GKE can be a good option if you want a low-code solution for processing streaming data. If you set it up on GKE, a managed version of Kubernetes, you get a managed, scalable environment and do not need to worry about handling the actual servers. Setup of the Apache…
